1. Prelim

This article implements the Transformer in PyTorch and fills in further details along the way.

First, import the required modules:

```python
import copy
import math

import numpy as np
import torch
import torch.nn as nn
```

Next, set the key hyperparameters:

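```python
embed_dim = 512                      # d_model
num_heads = 8                        # h
dropout = 0.1                        # P_drop used in Vaswani et al. (2017)
max_len = 5000                       # number of positions with a precomputed positional encoding
d_k = d_q = embed_dim // num_heads   # 64
d_v = d_k                            # 64
d_ff = 2048                          # inner dimension of the feed-forward network
num_layers = 6                       # N: number of stacked encoder / decoder layers
# src_vocab_size and tgt_vocab_size depend on the dataset and must be defined
# before Encoder, Decoder and Transformer are instantiated.
```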

2. Model Architecture

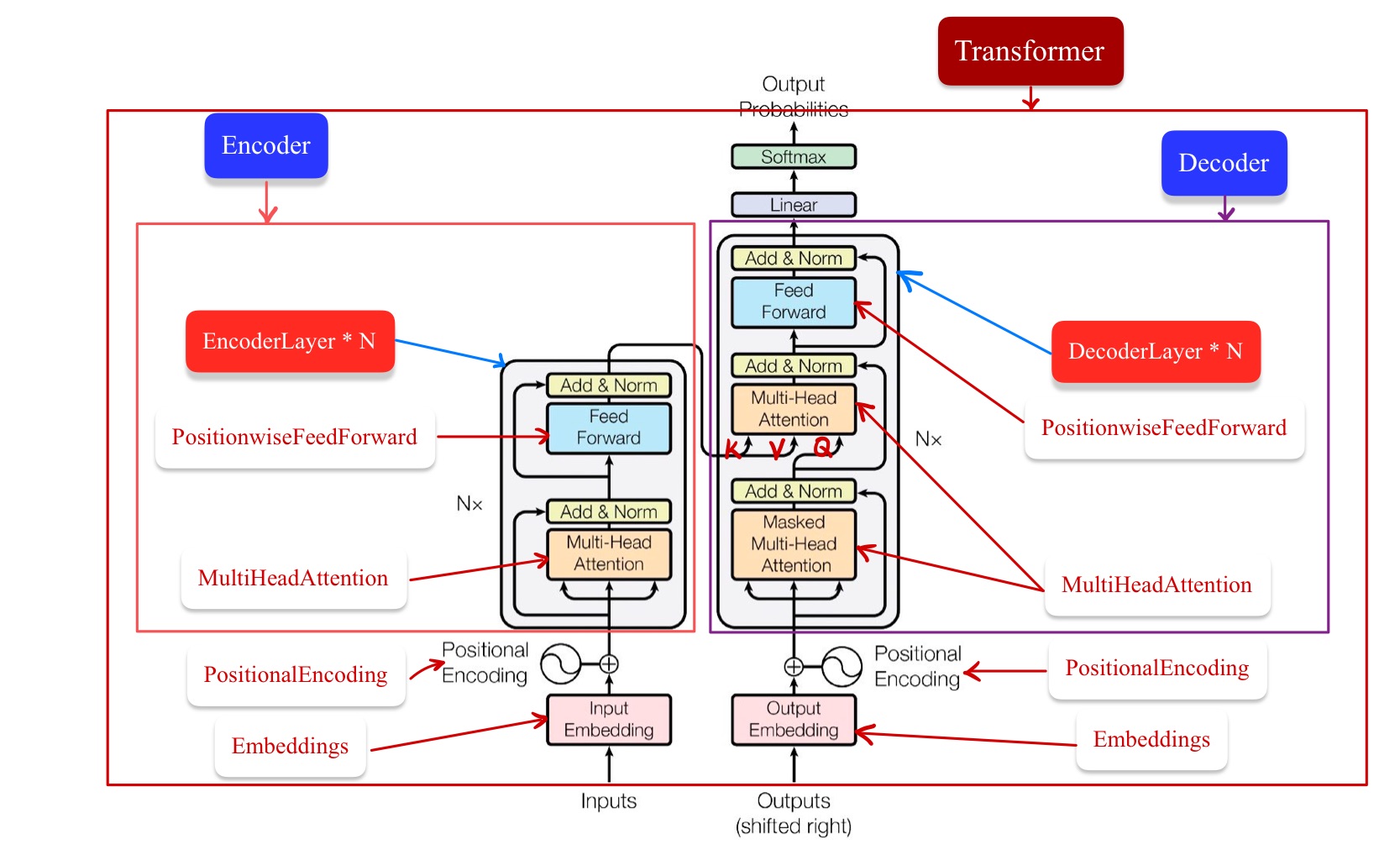

大部分热门的神经序列转导模型(Neural Sequence Transduction Model)都具有编码—解码架构 (Bahdanau, Cho, and Bengio, 2014) . Transformer也使用了这一架构:

Figure 1: Model architecture of Transformer (Vaswani et al., 2017) .

3. Embeddings and PositionalEncoding

3.1. Embeddings

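Embeddings maps every token of the input sequence to a vector of dimension embed_dim. For example, with 10 tokens and embed_dim=512 we obtain a 10 × 512 embedding matrix, one row per token. The forward function multiplies the embeddings by \(\sqrt{\texttt{embed\_dim}}\), as in the original paper, which does not explain the factor. A common explanation is that it enlarges small initial embedding values (for instance when all weight matrices are re-initialized with xavier_uniform, as the Annotated Transformer does) so that they are not dominated by the positional encoding, whose entries lie in \([-1, 1]\): if the embedding entries have variance \(1/\texttt{embed\_dim}\), the scaled entries have variance \(1\). Note that PyTorch's default initialization for nn.Embedding is \(\mathcal{N}(0, 1)\), not xavier_uniform.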

```python
class Embeddings(nn.Module):
    def __init__(self, vocab_size, embed_dim):
        """
        :param vocab_size: vocabulary size (number of tokens) of the current language, i.e. src_vocab_size or tgt_vocab_size
        :param embed_dim: dimension of the word embeddings
        """
        super(Embeddings, self).__init__()
        self.embed = nn.Embedding(vocab_size, embed_dim)
        self.embed_dim = embed_dim

    def forward(self, inputs):
        """
        :param inputs: torch.LongTensor of shape (batch_size, seq_len), where seq_len is src_len or tgt_len
        :return: tensor of shape (batch_size, seq_len, embed_dim)
        """
        # Scale by sqrt(embed_dim), as in Vaswani et al. (2017)
        return self.embed(inputs) * math.sqrt(self.embed_dim)
```
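A quick numerical check of the scaling argument, as a NumPy sketch. It assumes embedding weights drawn with variance 1/embed_dim (an illustrative choice, not PyTorch's default) and a hypothetical vocabulary size; multiplying by sqrt(embed_dim) then brings the variance to about 1.

```python
import numpy as np

embed_dim = 512
vocab_size = 2000  # hypothetical vocabulary size, for illustration only

rng = np.random.default_rng(0)
# Assumed initialization: zero mean, variance 1 / embed_dim
weights = rng.normal(0.0, 1.0 / np.sqrt(embed_dim), size=(vocab_size, embed_dim))

# The same scaling as Embeddings.forward
scaled = weights * np.sqrt(embed_dim)

print(round(weights.var(), 5))  # close to 1/512, about 0.00195
print(round(scaled.var(), 3))   # close to 1.0
```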

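torch.nn.Embedding takes several arguments; the two that matter here are num_embeddings and embedding_dim. num_embeddings is the size of the vocabulary, and embedding_dim is the dimension of each embedding vector.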
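Conceptually, nn.Embedding is a trainable lookup table of shape (num_embeddings, embedding_dim): indexing it with a (batch_size, seq_len) array of token ids returns one row per id. A NumPy sketch of that lookup, with tiny sizes and random placeholder values standing in for the learned weights:

```python
import numpy as np

num_embeddings, embedding_dim = 10, 4   # tiny sizes for illustration
rng = np.random.default_rng(0)
table = rng.normal(size=(num_embeddings, embedding_dim))  # stands in for nn.Embedding.weight

token_ids = np.array([[1, 2, 4],
                      [4, 3, 9]])       # (batch_size=2, seq_len=3)
vectors = table[token_ids]              # integer indexing = row lookup

print(vectors.shape)  # (2, 3, 4)
```

The same token id (4 here) always maps to the same row, wherever it appears in the batch.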

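The original Transformer shares one weight matrix between the source embedding, the target embedding and the pre-softmax linear layer, building on Press and Wolf (2017). This works for pairs such as English and other European languages, which can use a shared subword vocabulary because many words share roots. For language pairs such as Chinese and English, whose vocabularies barely overlap, there is little reason to share the matrix.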
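With weight tying, a single matrix E of shape (vocab_size, embed_dim) acts both as the embedding table (row lookup) and as the weight of the pre-softmax linear layer (logits = h E^T). The NumPy sketch below shows the idea with toy sizes and a shared vocabulary; in the PyTorch code of this article, one possible way would be assigning the same parameter, e.g. `self.linear.weight = self.decoder.tgt_emb.embed.weight`, since both have shape (tgt_vocab_size, embed_dim).

```python
import numpy as np

vocab_size, embed_dim = 6, 4            # toy sizes
rng = np.random.default_rng(0)
E = rng.normal(size=(vocab_size, embed_dim))  # one shared matrix

# Input side: embedding lookup selects rows of E
src_ids = np.array([0, 3, 5])
src_emb = E[src_ids]                    # (3, embed_dim)

# Output side: the pre-softmax projection reuses E, transposed
decoder_hidden = rng.normal(size=(2, embed_dim))
logits = decoder_hidden @ E.T           # (2, vocab_size)

print(src_emb.shape, logits.shape)      # (3, 4) (2, 6)
```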

3.2. PositionalEncoding

PositionalEncoding injects information about the position of each token. Recall the positional encoding formula:

\[\begin{aligned}

\text{PE}(\text{pos}, 2i)&=\sin(\text{pos}/10000^{2i/d_\text{model}}) \\

\text{PE}(\text{pos}, 2i+1)&=\cos(\text{pos}/10000^{2i/d_\text{model}})

\end{aligned}\]

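where \(\text{pos}\) is the position of a token in the sentence, and \(2i\) and \(2i+1\) index the even and odd embedding dimensions, with \(0 \le i < d_\text{model}/2\) (\(d_\text{model}\) is embed_dim in the code).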
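To make the formula concrete, here is a direct NumPy transcription with explicit loops (slow but easy to check against the equations), compared with a vectorized version that mirrors the PositionalEncoding class in this section. Sizes are small for illustration.

```python
import numpy as np

def pe_loops(max_len, d_model):
    pe = np.zeros((max_len, d_model))
    for pos in range(max_len):
        for i in range(d_model // 2):
            angle = pos / 10000 ** (2 * i / d_model)
            pe[pos, 2 * i] = np.sin(angle)      # even dimension 2i
            pe[pos, 2 * i + 1] = np.cos(angle)  # odd dimension 2i+1
    return pe

def pe_vectorized(max_len, d_model):
    position = np.arange(max_len)[:, None]      # (max_len, 1)
    div_term = np.exp(np.arange(0, d_model, 2) * -(np.log(10000.0) / d_model))
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(position * div_term)
    pe[:, 1::2] = np.cos(position * div_term)
    return pe

a, b = pe_loops(50, 16), pe_vectorized(50, 16)
print(np.allclose(a, b))  # True
print(a[0])               # position 0: sin(0) = 0 on even dims, cos(0) = 1 on odd dims
```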

```python
class PositionalEncoding(nn.Module):
    def __init__(self, embed_dim, dropout=0.1, max_len=5000):
        """
        :param embed_dim: dimension of the word embeddings
        :param dropout: probability that each element is zeroed during training
        :param max_len: number of positions for which encodings are precomputed
        """
        super(PositionalEncoding, self).__init__()
        self.dropout = nn.Dropout(p=dropout)

        pe = torch.zeros(max_len, embed_dim)
        position = torch.arange(0, max_len, dtype=torch.float).unsqueeze(1)  # (max_len, 1)
        # 1 / 10000^(2i / embed_dim), computed in log space
        div_term = torch.exp(torch.arange(0, embed_dim, 2, dtype=torch.float)
                             * -(math.log(10000.0) / embed_dim))              # (embed_dim / 2,)
        pe[:, 0::2] = torch.sin(position * div_term)  # even dimensions
        pe[:, 1::2] = torch.cos(position * div_term)  # odd dimensions
        pe = pe.unsqueeze(0)                          # (1, max_len, embed_dim)
        # Buffer: part of the module state, but not a trainable parameter
        self.register_buffer("pe", pe)

    def forward(self, inputs):
        """
        :param inputs: output of Embeddings, a tensor of shape (batch_size, seq_len, embed_dim)
        :return: embeddings plus positional encodings, shape (batch_size, seq_len, embed_dim)
        """
        return self.dropout(inputs + self.pe[:, :inputs.size(1), :])
```

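Dropout helps prevent overfitting. During training, nn.Dropout(p=dropout) zeroes each element of its input independently with probability p (10% for p=0.1) and scales the remaining elements by \(1/(1-p)\); in evaluation mode (after model.eval()) it passes its input through unchanged.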
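The "inverted" dropout behavior described above keeps the expected value of each element unchanged, so nothing needs rescaling at inference. A NumPy sketch of that behavior (an illustration, not PyTorch's actual implementation):

```python
import numpy as np

def inverted_dropout(x, p, training, rng):
    if not training or p == 0.0:
        return x                        # eval mode: identity
    keep = rng.random(x.shape) >= p     # keep each element with probability 1 - p
    return x * keep / (1.0 - p)         # rescale survivors by 1 / (1 - p)

rng = np.random.default_rng(0)
x = np.ones((1000, 512))
y = inverted_dropout(x, p=0.1, training=True, rng=rng)

print((y == 0).mean())  # fraction dropped, close to 0.1
print(y.mean())         # close to 1.0: expectation preserved
```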

torch.arange excludes end. For example, torch.arange(1, 3) returns tensor([1, 2]) with dtype torch.int64, and torch.arange(0, 512, 2) yields the even numbers from \(0\) to \(510\).
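In PositionalEncoding, torch.arange(0, embed_dim, 2) produces exactly the even indices \(2i\), and div_term writes \(1/10000^{2i/d}\) in the equivalent form \(\exp(-2i \ln(10000)/d)\). A NumPy check of both points (np.arange uses the same exclusive-end convention):

```python
import numpy as np

embed_dim = 512
two_i = np.arange(0, embed_dim, 2)       # 0, 2, ..., 510 (512 excluded)
print(two_i[0], two_i[-1], len(two_i))   # 0 510 256

div_term = np.exp(two_i * -(np.log(10000.0) / embed_dim))
direct = 1.0 / 10000.0 ** (two_i / embed_dim)
print(np.allclose(div_term, direct))     # True
```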

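.unsqueeze(dim) inserts a dimension of size 1 at position dim. In PositionalEncoding, position goes from shape (max_len,) to (max_len, 1) so that it broadcasts against div_term, and pe.unsqueeze(0) adds a batch dimension, giving (1, max_len, embed_dim).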
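The shape bookkeeping of PositionalEncoding, reproduced in NumPy, where `[:, None]` and `[None]` play the role of .unsqueeze(1) and .unsqueeze(0) (np.expand_dims would work too). The batch of zeros is a placeholder for real embeddings:

```python
import numpy as np

max_len, embed_dim = 5000, 512
position = np.arange(max_len)[:, None]   # like .unsqueeze(1): (5000,) -> (5000, 1)
div_term = np.exp(np.arange(0, embed_dim, 2) * -(np.log(10000.0) / embed_dim))  # (256,)

angles = position * div_term             # broadcasting: (5000, 1) * (256,) -> (5000, 256)
pe = np.zeros((max_len, embed_dim))
pe[:, 0::2] = np.sin(angles)
pe[:, 1::2] = np.cos(angles)
pe = pe[None]                            # like .unsqueeze(0): (1, 5000, 512)

inputs = np.zeros((2, 10, embed_dim))    # (batch_size, seq_len, embed_dim)
out = inputs + pe[:, :inputs.shape[1], :]  # the size-1 batch dim broadcasts
print(position.shape, angles.shape, pe.shape, out.shape)
```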

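register_buffer stores tensors that belong to the module's state but are not parameters. A buffer is saved in state_dict and moved along with .to(device), but it is not returned by parameters(), so the optimizer never updates it. This suits the fixed positional-encoding table.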

4. Encoder and Decoder

4.1. MultiHeadedAttention

The Transformer is built around the attention mechanism. As Vaswani et al. (2017) put it:

Attention mechanisms have become an integral part of compelling sequence modeling and transduction models in various tasks, allowing modeling of dependencies without regard to their distance in the input or output sequences.

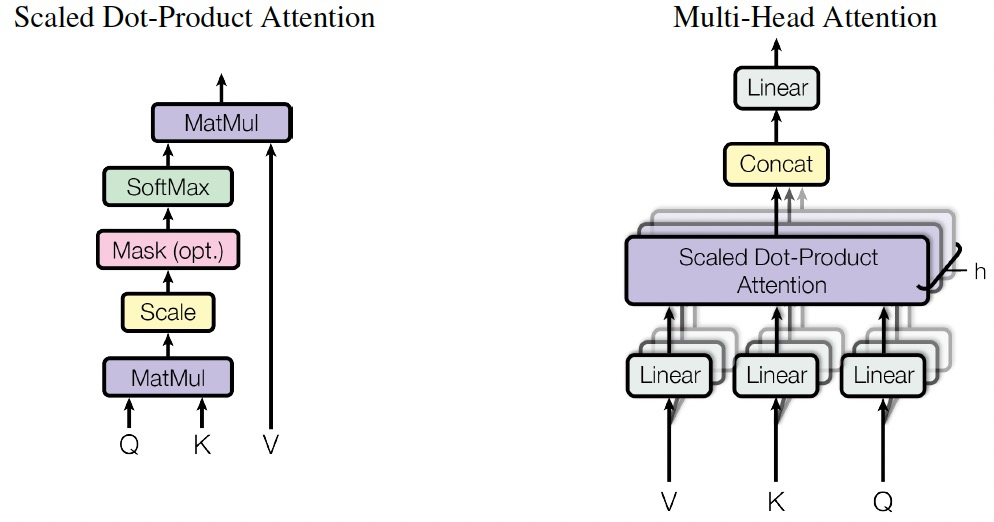

简而言之,注意力机制将序列中的数据点联系起来。Transformer使用一种特定类型的注意力机制,称为多头注意力(Multi-Head Attention)——这是模型中最重要的部分。多头注意力如下图所示:

Figure 2: (Left) Scaled dot-product attention. (Right) Multi-Head attention consists of several attention layers running in parallel (Vaswani et al., 2017) .

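The multi-head attention layer is built from scaled dot-product attention. Attention outputs a weighted average of the values (Value), with weights computed from the queries (Query) and keys (Key):
\[\text{Attention}(Q, K, V)=\text{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V,\]
where \(d_k\) is the dimension of the keys.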
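A single-head NumPy sketch of the formula, including an optional boolean mask that follows this article's convention (True = blocked, filled with -1e9 before the softmax). Inputs are random placeholders:

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V, mask=None):
    """Q: (q_len, d_k), K: (k_len, d_k), V: (k_len, d_v); mask: bool (q_len, k_len), True = blocked."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)          # (q_len, k_len)
    if mask is not None:
        scores = np.where(mask, -1e9, scores)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights              # weighted average of the rows of V

rng = np.random.default_rng(0)
Q, K, V = rng.normal(size=(3, 8)), rng.normal(size=(5, 8)), rng.normal(size=(5, 4))
out, w = scaled_dot_product_attention(Q, K, V)
print(out.shape, w.shape)  # (3, 4) (3, 5)
print(w.sum(axis=-1))      # every entry is 1 (up to rounding)
```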

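The core of self-attention is to compute attention weights from \(Q\) and \(K\) and apply them to \(V\), producing a weighted sum as output. With a single head, the model may concentrate too heavily on the current position itself when encoding it and neglect other positions; multi-head attention is one way to address this. It also lets the output of the attention layer combine representations from different subspaces, which increases the expressiveness of the model.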

```python
def attention(query, key, value, attn_mask=None, dropout=None):
    """
    :param query: tensor of shape (batch_size, head_num, q_len, d_q)
    :param key: tensor of shape (batch_size, head_num, k_len, d_k)
    :param value: tensor of shape (batch_size, head_num, v_len, d_v), where v_len = k_len
    :param attn_mask: bool tensor of shape (batch_size, head_num, q_len, k_len); True marks blocked positions
    :param dropout: an nn.Dropout(p) module, or None
    :return: attention output of shape (batch_size, head_num, q_len, d_v)
    """
    d_k = key.size(-1)
    scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(d_k)
    if attn_mask is not None:
        # Blocked positions get a large negative score, i.e. ~0 weight after softmax
        scores = scores.masked_fill(attn_mask, -1e9)
    attn = torch.softmax(scores, dim=-1)
    if dropout is not None:
        attn = dropout(attn)
    return torch.matmul(attn, value)
```

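torch.matmul also handles higher-dimensional tensors, such as those of shape (batch_size, head_num, seq_len, num_features): it multiplies the matrices formed by the last two dimensions and treats the leading dimensions as batch dimensions.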
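np.matmul follows the same batching rule for inputs with more than two dimensions, so it can illustrate what torch.matmul does in attention(): one matrix product per (batch, head) pair. Inputs are random placeholders:

```python
import numpy as np

batch_size, head_num, q_len, k_len, d_k = 2, 8, 5, 7, 64
rng = np.random.default_rng(0)
q = rng.normal(size=(batch_size, head_num, q_len, d_k))
k = rng.normal(size=(batch_size, head_num, k_len, d_k))

# Only the last two dims are multiplied; (batch_size, head_num) act as batch dims
scores = np.matmul(q, np.swapaxes(k, -2, -1))  # like key.transpose(-2, -1)
print(scores.shape)  # (2, 8, 5, 7)

# Same result as looping over every (batch, head) pair
loop = np.stack([np.stack([q[b, h] @ k[b, h].T for h in range(head_num)])
                 for b in range(batch_size)])
print(np.allclose(scores, loop))  # True
```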

We also define a clones function that creates n independent copies of the same module.

```python
def clones(module, n):
    """
    :param module: the module to replicate
    :param n: number of copies
    :return: nn.ModuleList holding n independent deep copies
    """
    return nn.ModuleList([copy.deepcopy(module) for _ in range(n)])
```
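Why copy.deepcopy rather than repeating one object: `[module] * n` would make every layer share the same weights. The pure-Python sketch below uses a hypothetical `Layer` class as a stand-in for an nn.Module; in the real clones, nn.ModuleList additionally registers the copies as submodules so their parameters reach the optimizer.

```python
import copy

class Layer:
    """Hypothetical stand-in for an nn.Module holding trainable weights."""
    def __init__(self):
        self.weights = [0.0, 0.0]

def clones_like(module, n):
    # Same idea as clones(), minus nn.ModuleList
    return [copy.deepcopy(module) for _ in range(n)]

template = Layer()
independent = clones_like(template, 3)  # 3 separate objects
shared = [template] * 3                 # 3 references to ONE object

independent[0].weights[0] = 1.0
shared[0].weights[0] = 1.0

print([layer.weights[0] for layer in independent])  # [1.0, 0.0, 0.0]
print([layer.weights[0] for layer in shared])       # [1.0, 1.0, 1.0]
```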

Each head uses three matrices \(W^Q\), \(W^K\) and \(W^V\) to project the input into \(Q\), \(K\) and \(V\), and computes attention on its own. The outputs of the \(h\) heads are then concatenated and projected back by a matrix \(W^O\):

\[\text{MultiHead}(Q, K, V)=\text{Concat}(\text{head}_1, \ldots, \text{head}_h)W^O,\]
where \(\text{head}_i=\text{Attention}(QW_i^Q, KW_i^K, VW_i^V)\), \(W_i^Q \in \mathbb{R}^{d_\text{model} \times d_k}\), \(W_i^K \in \mathbb{R}^{d_\text{model} \times d_k}\), \(W_i^V \in \mathbb{R}^{d_\text{model} \times d_v}\) and \(W^O \in \mathbb{R}^{hd_v \times d_\text{model}}\). Following Vaswani et al. (2017), we use \(h=8\) and \(d_k=d_v=d_\text{model}/h=64\).

```python
class MultiHeadAttention(nn.Module):
    def __init__(self):
        super(MultiHeadAttention, self).__init__()
        self.dropout = nn.Dropout(p=dropout)
        self.q_weight = nn.Linear(embed_dim, d_q * num_heads, bias=False)
        self.k_weight = nn.Linear(embed_dim, d_k * num_heads, bias=False)
        self.v_weight = nn.Linear(embed_dim, d_v * num_heads, bias=False)
        self.o_weight = nn.Linear(d_v * num_heads, embed_dim, bias=False)
        # Created here rather than inside forward, so its parameters are trained
        # and moved to the GPU together with the rest of the model
        self.layer_norm = nn.LayerNorm(embed_dim)

    def forward(self, q_inputs, k_inputs, v_inputs, attn_mask=None):
        """
        :param q_inputs: tensor of shape (batch_size, q_len, embed_dim)
        :param k_inputs: tensor of shape (batch_size, k_len, embed_dim)
        :param v_inputs: tensor of shape (batch_size, v_len, embed_dim), where v_len = k_len
        :param attn_mask: bool tensor of shape (batch_size, q_len, k_len)
        :return: tensor of shape (batch_size, q_len, embed_dim)
        """
        residual = q_inputs
        batch_size = q_inputs.size(0)
        if attn_mask is not None:
            # Same mask for every head: (batch_size, num_heads, q_len, k_len)
            attn_mask = attn_mask.unsqueeze(1).repeat(1, num_heads, 1, 1)
        # Project, then split into heads: (batch_size, num_heads, len, d)
        q = self.q_weight(q_inputs).view(batch_size, -1, num_heads, d_q).transpose(1, 2)
        k = self.k_weight(k_inputs).view(batch_size, -1, num_heads, d_k).transpose(1, 2)
        v = self.v_weight(v_inputs).view(batch_size, -1, num_heads, d_v).transpose(1, 2)
        attn = attention(q, k, v, attn_mask, self.dropout)
        # Concatenate the heads and project back to embed_dim
        z = self.o_weight(attn.transpose(1, 2).contiguous().view(batch_size, -1, d_v * num_heads))
        # Residual connection followed by layer normalization (Add & Norm)
        return self.layer_norm(z + residual)
```
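The `.view(...).transpose(1, 2)` calls in forward split one big projection into \(h\) heads, and the reverse transpose and view concatenate them again. A NumPy sketch of that reshaping with the article's sizes (the projected input is random placeholder data), plus the parameter count of the four bias-free projection matrices:

```python
import numpy as np

batch_size, seq_len, h, d_k = 2, 10, 8, 64
d_model = h * d_k                                    # 512
rng = np.random.default_rng(0)
x = rng.normal(size=(batch_size, seq_len, d_model))  # e.g. the output of q_weight(q_inputs)

# Split: (batch, len, h*d_k) -> (batch, len, h, d_k) -> (batch, h, len, d_k)
heads = x.reshape(batch_size, seq_len, h, d_k).transpose(0, 2, 1, 3)
print(heads.shape)  # (2, 8, 10, 64)

# Concat: the inverse, as done before o_weight
merged = heads.transpose(0, 2, 1, 3).reshape(batch_size, seq_len, h * d_k)
print(np.array_equal(merged, x))  # True

# Head 3 sees columns 3*d_k:4*d_k of the projection, i.e. W_3^Q is a
# d_model x d_k slice of the single big W^Q matrix
print(np.array_equal(heads[:, 3], x[:, :, 3 * d_k:4 * d_k]))  # True

# Parameters of q_weight, k_weight, v_weight and o_weight together
print(4 * d_model * d_model)  # 1048576
```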

4.2. PositionwiseFeedForward

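PositionwiseFeedForward is a fully connected feed-forward network applied to each position separately and identically. It consists of two linear transformations with a ReLU activation in between: \[\text{FFN}(x)=\max(0, xW_1+b_1)W_2+b_2.\]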

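```python
class PositionwiseFeedForward(nn.Module):
    def __init__(self):
        super(PositionwiseFeedForward, self).__init__()
        self.ffn = nn.Sequential(
            nn.Linear(embed_dim, d_ff),
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(d_ff, embed_dim),
        )
        # Created here rather than inside forward, so it is trained and moved with the model
        self.layer_norm = nn.LayerNorm(embed_dim)

    def forward(self, inputs):
        """
        :param inputs: tensor of shape (batch_size, seq_len, embed_dim)
        :return: tensor of shape (batch_size, seq_len, embed_dim)
        """
        # Residual connection followed by layer normalization (Add & Norm)
        return self.layer_norm(self.ffn(inputs) + inputs)
```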
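"Position-wise" means the same \(W_1, b_1, W_2, b_2\) are applied to every position independently, so running the network on the whole sequence equals running it one position at a time. A NumPy sketch of the FFN formula with random placeholder weights (dropout and the residual connection omitted):

```python
import numpy as np

d_model, d_ff, seq_len = 512, 2048, 6
rng = np.random.default_rng(0)
W1, b1 = rng.normal(0, 0.02, size=(d_model, d_ff)), np.zeros(d_ff)
W2, b2 = rng.normal(0, 0.02, size=(d_ff, d_model)), np.zeros(d_model)

def ffn(x):
    return np.maximum(0, x @ W1 + b1) @ W2 + b2  # max(0, x W1 + b1) W2 + b2

x = rng.normal(size=(seq_len, d_model))
whole = ffn(x)                                               # all positions at once
per_position = np.stack([ffn(x[t]) for t in range(seq_len)])  # one position at a time
print(whole.shape, np.allclose(whole, per_position))         # (6, 512) True

print(W1.size + b1.size + W2.size + b2.size)  # 2099712 parameters per FFN
```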

4.3. EncoderLayer

EncoderLayer consists of a MultiHeadAttention sublayer followed by a PositionwiseFeedForward sublayer:

```python
class EncoderLayer(nn.Module):
    def __init__(self):
        super(EncoderLayer, self).__init__()
        self.enc_attn = MultiHeadAttention()
        self.pff = PositionwiseFeedForward()

    def forward(self, enc_inputs, enc_mask):
        """
        :param enc_inputs: tensor of shape (batch_size, src_len, embed_dim)
        :param enc_mask: bool tensor of shape (batch_size, src_len, src_len)
        :return: tensor of shape (batch_size, src_len, embed_dim)
        """
        # Self-attention: queries, keys and values all come from enc_inputs
        return self.pff(self.enc_attn(enc_inputs, enc_inputs, enc_inputs, enc_mask))
```

4.4. Encoder

Encoder applies the embedding and positional encoding, followed by a stack of num_layers identical EncoderLayers.

```python
class Encoder(nn.Module):
    def __init__(self):
        super(Encoder, self).__init__()
        self.src_emb = Embeddings(src_vocab_size, embed_dim)  # src_vocab_size must be defined beforehand
        self.pos_enc = PositionalEncoding(embed_dim)
        self.layers = clones(EncoderLayer(), num_layers)

    def forward(self, enc_inputs):
        """
        :param enc_inputs: torch.LongTensor of shape (batch_size, src_len)
        :return: tensor of shape (batch_size, src_len, embed_dim)
        """
        enc_outputs = self.pos_enc(self.src_emb(enc_inputs))
        enc_mask = padding_mask(enc_inputs, enc_inputs)  # hide padded source tokens
        for layer in self.layers:
            enc_outputs = layer(enc_outputs, enc_mask)
        return enc_outputs
```

4.5. DecoderLayer

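DecoderLayer reuses most of these classes, with one point to note: the first MultiHeadAttention (masked self-attention) takes only the target-language sequence, while the second one combines both languages, taking its queries from the decoder and its keys and values from the encoder output.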

```python
class DecoderLayer(nn.Module):
    def __init__(self):
        super(DecoderLayer, self).__init__()
        self.dec_attn = MultiHeadAttention()      # masked self-attention over the target
        self.dec_enc_attn = MultiHeadAttention()  # encoder-decoder (cross) attention
        self.pff = PositionwiseFeedForward()

    def forward(self, dec_inputs, enc_outputs, dec_mask, dec_enc_mask):
        """
        :param dec_inputs: tensor of shape (batch_size, tgt_len, embed_dim)
        :param enc_outputs: tensor of shape (batch_size, src_len, embed_dim)
        :param dec_mask: bool tensor of shape (batch_size, tgt_len, tgt_len)
        :param dec_enc_mask: bool tensor of shape (batch_size, tgt_len, src_len)
        :return: tensor of shape (batch_size, tgt_len, embed_dim)
        """
        dec_outputs = self.dec_attn(dec_inputs, dec_inputs, dec_inputs, dec_mask)
        # Queries from the decoder; keys and values from the encoder output
        dec_outputs = self.dec_enc_attn(dec_outputs, enc_outputs, enc_outputs, dec_enc_mask)
        return self.pff(dec_outputs)
```
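In the encoder-decoder attention, the queries cover tgt_len positions while the keys and values cover src_len positions, so the attention weights form a tgt_len × src_len matrix and the output keeps the decoder's length. A single-head NumPy shape sketch with toy sizes, random placeholder data, and the learned projections omitted:

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

src_len, tgt_len, d_model = 7, 4, 16
rng = np.random.default_rng(0)
enc_outputs = rng.normal(size=(src_len, d_model))  # memory from the encoder
dec_hidden = rng.normal(size=(tgt_len, d_model))   # output of the masked self-attention sublayer

weights = softmax(dec_hidden @ enc_outputs.T / np.sqrt(d_model))  # (tgt_len, src_len)
context = weights @ enc_outputs                                    # (tgt_len, d_model)
print(weights.shape, context.shape)  # (4, 7) (4, 16)
```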

4.6. Decoder

Decoder is built like Encoder, except that it has to prepare two masks:

```python
class Decoder(nn.Module):
    def __init__(self):
        super(Decoder, self).__init__()
        self.tgt_emb = Embeddings(tgt_vocab_size, embed_dim)  # tgt_vocab_size must be defined beforehand
        self.pos_enc = PositionalEncoding(embed_dim)
        self.layers = clones(DecoderLayer(), num_layers)

    def forward(self, dec_inputs, enc_inputs, enc_outputs):
        """
        :param dec_inputs: torch.LongTensor of shape (batch_size, tgt_len)
        :param enc_inputs: torch.LongTensor of shape (batch_size, src_len)
        :param enc_outputs: tensor of shape (batch_size, src_len, embed_dim)
        :return: tensor of shape (batch_size, tgt_len, embed_dim)
        """
        dec_outputs = self.pos_enc(self.tgt_emb(dec_inputs))
        # Self-attention: block padded target tokens and future positions
        dec_pad_mask = padding_mask(dec_inputs, dec_inputs)
        dec_attn_mask = attention_mask(dec_inputs, dec_inputs)
        dec_mask = dec_pad_mask | dec_attn_mask
        # Cross-attention: block only padded source tokens. Every target position
        # may attend to the whole source sentence, so no causal mask here.
        dec_enc_mask = padding_mask(dec_inputs, enc_inputs)
        for layer in self.layers:
            dec_outputs = layer(dec_outputs, enc_outputs, dec_mask, dec_enc_mask)
        return dec_outputs
```

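The Transformer itself is comparatively simple: it consists of the Encoder, the Decoder, and a final Linear layer followed by a Softmax. The code leaves the Softmax out and returns logits, because nn.CrossEntropyLoss applies log-softmax internally; apply torch.softmax to the logits when probabilities are needed at inference time.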

```python
class Transformer(nn.Module):
    def __init__(self):
        super(Transformer, self).__init__()
        self.encoder = Encoder()
        self.decoder = Decoder()
        # Final projection to the target vocabulary (produces logits)
        self.linear = nn.Linear(embed_dim, tgt_vocab_size, bias=False)

    def forward(self, enc_inputs, dec_inputs):
        """
        :param enc_inputs: torch.LongTensor of shape (batch_size, src_len)
        :param dec_inputs: torch.LongTensor of shape (batch_size, tgt_len)
        :return: logits of shape (batch_size * tgt_len, tgt_vocab_size)
        """
        enc_outputs = self.encoder(enc_inputs)
        dec_outputs = self.decoder(dec_inputs, enc_inputs, enc_outputs)
        dec_logits = self.linear(dec_outputs)
        # Flatten so the logits can be passed directly to nn.CrossEntropyLoss
        return dec_logits.view(-1, dec_logits.size(-1))
```
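The flattened (batch_size*tgt_len, tgt_vocab_size) output pairs with a flattened target of shape (batch_size*tgt_len,), which is the layout nn.CrossEntropyLoss expects. A NumPy sketch of the computation that loss performs (log-softmax, then negative log-likelihood), with toy sizes, random logits, and the assumption that id 0 is padding and is skipped (as ignore_index=0 would do):

```python
import numpy as np

batch_size, tgt_len, vocab = 2, 3, 5
rng = np.random.default_rng(0)
logits = rng.normal(size=(batch_size * tgt_len, vocab))   # like Transformer.forward output
targets = np.array([[1, 4, 0],
                    [2, 2, 3]]).reshape(-1)               # (batch_size*tgt_len,), 0 = padding (assumed)

# Log-softmax over the vocabulary, computed stably
shifted = logits - logits.max(axis=1, keepdims=True)
log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

nll = -log_probs[np.arange(len(targets)), targets]  # per-token loss
mask = targets != 0                                  # skip padded positions
loss = nll[mask].mean()
print(loss)
```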

6. Mask

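Both the Encoder and the Decoder need a padding mask. The key difference between them is that, when decoding step \(t\), the Decoder may only use the inputs at steps \(1, \ldots, t\) and must not see step \(t+1\) or anything after it. We therefore need two functions that produce the two kinds of mask.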

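```python
def attention_mask(seq1, seq2):
    """
    Causal (subsequent-position) mask.
    :param seq1: tensor of shape (batch_size, seq1_len)
    :param seq2: tensor of shape (batch_size, seq2_len)
    :return: bool tensor of shape (batch_size, seq1_len, seq2_len); True above the main diagonal (future positions)
    """
    attn_shape = (seq1.size(0), seq1.size(1), seq2.size(1))
    attn_mask = np.triu(np.ones(attn_shape), k=1)
    # Move to the inputs' device so the mask can be combined with GPU tensors
    return torch.from_numpy(attn_mask).bool().to(seq1.device)


def padding_mask(q, k):
    """
    :param q: tensor of shape (batch_size, q_len), where q_len is src_len or tgt_len
    :param k: tensor of shape (batch_size, k_len), where k_len is src_len or tgt_len
    :return: bool tensor of shape (batch_size, q_len, k_len); True where the key is padding, False elsewhere
    """
    batch_size, q_len = q.size()
    k_len = k.size(1)
    # Assumes the padding token id is 0
    return k.eq(0).unsqueeze(1).expand(batch_size, q_len, k_len)
```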

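np.triu returns the upper triangle of an array. With k=1 it keeps the elements strictly above the main diagonal and sets the main diagonal and everything below it to 0, so the remaining 1s mark exactly the future positions that must be blocked.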
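A small NumPy illustration of how the decoder's self-attention mask combines the two kinds, using a hypothetical target batch with one padded position (id 0, as padding_mask assumes). True means blocked:

```python
import numpy as np

tgt = np.array([[5, 7, 9, 0]])  # (batch_size=1, tgt_len=4); the last token is padding
batch_size, tgt_len = tgt.shape

# Causal mask: True strictly above the main diagonal (k=1), i.e. future positions
causal = np.triu(np.ones((batch_size, tgt_len, tgt_len)), k=1).astype(bool)

# Padding mask: True wherever the *key* is padding, repeated for every query row
padding = np.broadcast_to((tgt == 0)[:, None, :], (batch_size, tgt_len, tgt_len))

dec_mask = causal | padding
print(dec_mask[0].astype(int))
# [[0 1 1 1]
#  [0 0 1 1]
#  [0 0 0 1]
#  [0 0 0 1]]
```

Each row is a query position; row \(t\) may attend only to columns \(\le t\) that are not padding.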

7. Full Model

We now build the complete model:

8. Reference

Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv Preprint arXiv:1409.0473 , 2014.

Harvard NLP. The Annotated Transformer. Online: http://nlp.seas.harvard.edu/2018/04/03/attention.html , 2018.

Ofir Press and Lior Wolf. Using the Output Embedding to Improve Language Models. arXiv Preprint arXiv:1608.05859 , 2017.

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention Is All You Need. In 31st Conference on Neural Information Processing Systems , 2017.